Predict Effects of Alzheimer’s Variants Using ATAC Models#

In this tutorial, we demonstrate how to predict the impact of sequence variation using a trained gReLU model.

import anndata as ad

import pandas as pd

import numpy as np

import os

Load the CATlas model#

This is a binary classification model trained on snATAC-seq data from CATlas (http://catlas.org/humanenhancer/). It predicts the probability that an input sequence is accessible in each of a set of cell types.

import grelu.resources

model = grelu.resources.load_model(project='human-atac-catlas', model_name='model')

View the model’s metadata#

model.data_params is a dictionary containing metadata about the data used to train the model. Let’s look at what information is stored:

model.data_params.keys()

dict_keys(['tasks', 'train', 'val', 'test'])

for k, v in model.data_params['train'].items():

    # Skip the long list of training intervals

    if k != "intervals":

        print(k, v)

bin_size 1

end both

genome hg38

label_aggfunc None

label_len 200

label_transform_func None

max_label_clip None

max_pair_shift 0

max_seq_shift 2

min_label_clip None

n_alleles 1

n_augmented 1

n_seqs 977014

n_tasks 204

padded_label_len 200

padded_seq_len 204

predict False

rc True

seq_len 200

Note the parameter seq_len. This tells us that the model was trained on 200 bp long sequences.
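The padded length in the printout above is consistent with the shift augmentation setting. As a small check, assuming (this formula is an assumption, not taken from the gReLU source) that each training sequence is padded by max_seq_shift bases on both sides so that it can be shifted before cropping:

```python
# Values copied from the model.data_params['train'] printout above.
seq_len = 200
max_seq_shift = 2
padded_seq_len = 204

# Assumed relationship: pad by max_seq_shift on each side of the sequence.
expected_padded_len = seq_len + 2 * max_seq_shift

print(expected_padded_len == padded_seq_len)
```

Either way, sequences passed to the model for prediction should be seq_len (200) bases long.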

model.data_params['tasks'] is a large dictionary containing metadata about the output tracks that the model predicts. We can collect these into a dataframe called tasks:

tasks = pd.DataFrame(model.data_params['tasks'])

tasks.head(3)

| | name | cell type |

|---|---|---|

| 0 | Follicular | Follicular |

| 1 | Fibro General | Fibro General |

| 2 | Acinar | Acinar |

Load Alzheimer’s Variants from GWAS Catalog (Jansen et al. 2019 meta-analysis)#

Download a small subset of variants from the Alzheimer's disease (AD) summary statistics file of this meta-analysis. The subset contains 1,000 variants mapped to the hg19 genome.

import grelu.resources

variant_dir = grelu.resources.get_artifact(

project='alzheimers-variant-tutorial',

name='dataset'

).download()

variant_file = os.path.join(variant_dir, "variants.txt")

variants = pd.read_table(variant_file)

variants.head(3)

| | snpid | chrom | pos | alt | ref | rsid | zscore | pval | nsum | neff | direction | eaf | beta | se |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 6:32630634_G_A | chr6 | 32630634 | G | A | 6:32630634 | 3.974476 | 0.000071 | 71639 | 71639.0 | ?+?+ | 0.2237 | 0.025194 | 0.006339 |

| 1 | 6:32630797_A_G | chr6 | 32630797 | A | G | 6:32630797 | 4.040244 | 0.000053 | 71639 | 71639.0 | ?+?+ | 0.2435 | 0.024866 | 0.006155 |

| 2 | 6:32630824_T_C | chr6 | 32630824 | T | C | 6:32630824 | 3.921736 | 0.000088 | 71639 | 71639.0 | ?+?+ | 0.1859 | 0.026630 | 0.006790 |

Filter variants#

from grelu.data.preprocess import filter_blacklist, filter_chromosomes

from grelu.variant import filter_variants

Remove indels, since we don’t support them for now. We also remove variants where one of the alleles contains Ns.

variants = filter_variants(variants, max_del_len=0, max_insert_len=0, standard_bases=True)

Initial number of variants: 1000

Final number of variants: 989

Remove variants on non-standard chromosomes.

variants = filter_chromosomes(variants, include='autosomesXY')

Keeping 989 intervals

Remove SNPs that fall in unmappable (blacklisted) regions.

variants = filter_blacklist(variants, genome="hg19", window=100).reset_index(drop=True)

Keeping 988 intervals

variants.head(3)

| | snpid | chrom | pos | alt | ref | rsid | zscore | pval | nsum | neff | direction | eaf | beta | se |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 6:32630634_G_A | chr6 | 32630634 | G | A | 6:32630634 | 3.974476 | 0.000071 | 71639 | 71639.0 | ?+?+ | 0.2237 | 0.025194 | 0.006339 |

| 1 | 6:32630797_A_G | chr6 | 32630797 | A | G | 6:32630797 | 4.040244 | 0.000053 | 71639 | 71639.0 | ?+?+ | 0.2435 | 0.024866 | 0.006155 |

| 2 | 6:32630824_T_C | chr6 | 32630824 | T | C | 6:32630824 | 3.921736 | 0.000088 | 71639 | 71639.0 | ?+?+ | 0.1859 | 0.026630 | 0.006790 |

Predict variant effects#

The grelu.variant module contains several functions for analyzing variants. The predict_variant_effects function takes a model and a dataframe of variants, and predicts the activity of the genomic region around each variant twice: once with the ref allele and once with the alt allele. It then compares the two predictions and returns an effect size for each variant. We can also apply data augmentation, i.e. make predictions for several versions of each sequence and average them.
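To make the compare-and-average idea concrete, here is a minimal pure-Python sketch. It is not the gReLU implementation: toy_model is a hypothetical stand-in for a trained model, and the helper names are invented for illustration. It scores the ref and alt sequences on both strands, averages the two strand predictions, and reports the log2 fold change of alt over ref.

```python
import math

COMPLEMENT = {"A": "T", "C": "G", "G": "C", "T": "A"}


def reverse_complement(seq):
    """Return the reverse complement of a DNA sequence."""
    return "".join(COMPLEMENT[base] for base in reversed(seq))


def toy_model(seq):
    """Hypothetical stand-in for a model: a strand-asymmetric score in (0, 1)."""
    return (seq.count("A") + 1) / (len(seq) + 2)


def predict_rc_averaged(seq):
    """Average the prediction over the forward and reverse-complement strands."""
    return 0.5 * (toy_model(seq) + toy_model(reverse_complement(seq)))


def log2fc_effect(ref_seq, alt_seq):
    """log2 fold change of the alt prediction relative to the ref prediction."""
    return math.log2(predict_rc_averaged(alt_seq) / predict_rc_averaged(ref_seq))


ref = "ACGTACGGTA"
alt = "ACGTAAGGTA"  # C>A substitution at index 5
effect = log2fc_effect(ref, alt)
print(round(effect, 4))
```

A positive value means the alt allele raises the (toy) prediction relative to ref; a negative value means it lowers it.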

import grelu.variant

odds = grelu.variant.predict_variant_effects(

variants=variants,

model=model,

devices=0, # Run on GPU 0

num_workers=8,

batch_size=512,

genome="hg19",

compare_func="log2FC", # Return the log2 fold change between alt and ref predictions

return_ad=True, # Return an anndata object.

rc=True, # Average predictions over the forward and reverse-complement strands.

)

making dataset

Predicting DataLoader 0: 100%|█████████████████████████████████████████████████| 8/8 [00:01<00:00, 7.64it/s]

odds is an AnnData object, the Python counterpart of SingleCellExperiment in R. If you are unfamiliar with AnnData, this is a good point to pause and read its documentation: https://anndata.readthedocs.io/en/latest/.

odds

AnnData object with n_obs × n_vars = 988 × 204

obs: 'snpid', 'chrom', 'pos', 'alt', 'ref', 'rsid', 'zscore', 'pval', 'nsum', 'neff', 'direction', 'eaf', 'beta', 'se'

var: 'cell type'

odds.var contains the cell types for which the model makes predictions.

odds.var.head(3)

| name | cell type |

|---|---|

| Follicular | Follicular |

| Fibro General | Fibro General |

| Acinar | Acinar |

odds.obs contains the variants.

odds.obs.head(3)

| | snpid | chrom | pos | alt | ref | rsid | zscore | pval | nsum | neff | direction | eaf | beta | se |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 6:32630634_G_A | chr6 | 32630634 | G | A | 6:32630634 | 3.974476 | 0.000071 | 71639 | 71639.0 | ?+?+ | 0.2237 | 0.025194 | 0.006339 |

| 1 | 6:32630797_A_G | chr6 | 32630797 | A | G | 6:32630797 | 4.040244 | 0.000053 | 71639 | 71639.0 | ?+?+ | 0.2435 | 0.024866 | 0.006155 |

| 2 | 6:32630824_T_C | chr6 | 32630824 | T | C | 6:32630824 | 3.921736 | 0.000088 | 71639 | 71639.0 | ?+?+ | 0.1859 | 0.026630 | 0.006790 |

Finally, odds.X contains the predicted effect size of each variant in each cell type, as a numpy array of shape (n_variants × n_cell_types).

print(odds.X[:5, :5])

[[ 1.1112168e+00 1.1200457e+00 1.3333474e+00 1.7155571e+00

1.6624324e+00]

[ 2.9757369e-02 -7.0971392e-02 -6.8057328e-04 1.2972704e-01

6.9289096e-02]

[-5.2687943e-01 -7.6652773e-02 -7.0005655e-01 -5.5351615e-01

-6.9800067e-01]

[ 5.9476948e-01 4.8713604e-01 7.1450031e-01 2.2411114e-01

4.1950190e-01]

[-1.2315573e+00 -8.5888767e-01 -4.4588929e-01 3.2726651e-01

1.3142172e-01]]

Select a variant with effect specific to microglia#

As an example, we search for variants that strongly disrupt accessibility in microglia but not in all cell types.

We set some arbitrary thresholds: the log2 fold change of the alt allele w.r.t. the ref allele in microglia should be < -2, and the log2 fold change averaged across all cell types should be nonnegative.

This implies that the alt allele should decrease accessibility in microglia to < 1/4 of its reference value, but should not decrease accessibility across all cell types on average.
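The arithmetic behind these thresholds can be sketched in a few lines. The helper names below (log2fc, fold_ratio, passes_filter) are hypothetical and exist only for this illustration; they are not part of gReLU.

```python
import math


def log2fc(alt_pred, ref_pred):
    """log2 fold change of the alt prediction relative to the ref prediction."""
    return math.log2(alt_pred / ref_pred)


def fold_ratio(l2fc):
    """Convert a log2 fold change back to the alt/ref ratio."""
    return 2.0 ** l2fc


def passes_filter(microglia_l2fc, mean_l2fc):
    """Apply the two thresholds described above to a single variant."""
    return microglia_l2fc < -2 and mean_l2fc >= 0


# A log2 fold change of -2 corresponds to alt = 1/4 of ref.
print(fold_ratio(-2))  # 0.25

# A variant with a strong microglia effect but no average decrease passes.
print(passes_filter(microglia_l2fc=-2.5, mean_l2fc=0.1))
```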

# Calculate the mean variant effect across all cell types

mean_variant_effect = odds.X.mean(1)

# Calculate the variant effect only in microglia

microglia_effect = odds[:, 'Microglia'].X.squeeze()

specific_variant_idx = np.where(

(microglia_effect < -2) & (mean_variant_effect >= 0)

)[0]

specific_variant_idx

array([498])

We isolate this microglia-specific variant for further analysis.

variant = odds[specific_variant_idx, :]

variant

View of AnnData object with n_obs × n_vars = 1 × 204

obs: 'snpid', 'chrom', 'pos', 'alt', 'ref', 'rsid', 'zscore', 'pval', 'nsum', 'neff', 'direction', 'eaf', 'beta', 'se'

var: 'cell type'

variant.obs

| | snpid | chrom | pos | alt | ref | rsid | zscore | pval | nsum | neff | direction | eaf | beta | se |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 498 | 6:76659840_T_G | chr6 | 76659840 | T | G | rs138940043 | 4.009219 | 0.000061 | 363637 | 363637.0 | ??+? | 0.00656 | 0.058235 | 0.014525 |

Let’s look at the effect size (log2FC) for this variant:

variant_effect_size = variant[0, "Microglia"].X

variant_effect_size

ArrayView([[-2.7398472]], dtype=float32)

View importance scores in microglia for the bases surrounding the selected variant#

We can use several different approaches to calculate the importance of each base in the reference and alternate sequence. However, we want to score each base specifically by its importance to the model’s prediction in microglia and not the model’s predictions on other tasks.

To do this we define a transform that operates on the model’s predictions and selects only the predictions in Microglia.

from grelu.transforms.prediction_transforms import Aggregate

microglia_score = Aggregate(tasks=["Microglia"], model=model)

Next, we extract the 200 bp long genomic sequences containing the reference and alternate alleles.

ref_seq, alt_seq = grelu.variant.variant_to_seqs(

seq_len=model.data_params['train']['seq_len'], # Create variant-centered sequence of this length

genome='hg19',

**variant.obs.iloc[0][["chrom", "pos", "ref", "alt"]]

)

ref_seq, alt_seq

('CAAAGATATATGTCATAATAATTATCTTTTCACTTTCTTTGTTAGGGACCAGGAATGATAAACCACTTAGTCATTTTTTAGGTTTACAAGAACTTAAGGGGAACTAAGAAAGGAACCCTTACTCCTGAACTCTCAGCCTCATCTGTGCTGGACCATTCTAACTTTGTACCCTTTCATGAGATTGATATAATTTAGAAAAT',

'CAAAGATATATGTCATAATAATTATCTTTTCACTTTCTTTGTTAGGGACCAGGAATGATAAACCACTTAGTCATTTTTTAGGTTTACAAGAACTTAAGGTGAACTAAGAAAGGAACCCTTACTCCTGAACTCTCAGCCTCATCTGTGCTGGACCATTCTAACTTTGTACCCTTTCATGAGATTGATATAATTTAGAAAAT')

We are now ready to calculate the per-base importance scores. For this, we use the grelu.interpret.score module, which contains functions related to scoring the importance of each base in a sequence. We choose the “inputxgradient” method to score each base.

import grelu.interpret.score

ref_attrs = grelu.interpret.score.get_attributions(

model, ref_seq, prediction_transform=microglia_score, device=0,

seed=0, method="inputxgradient",

)

alt_attrs = grelu.interpret.score.get_attributions(

model, alt_seq, prediction_transform=microglia_score, device=0,

seed=0, method="inputxgradient",

)

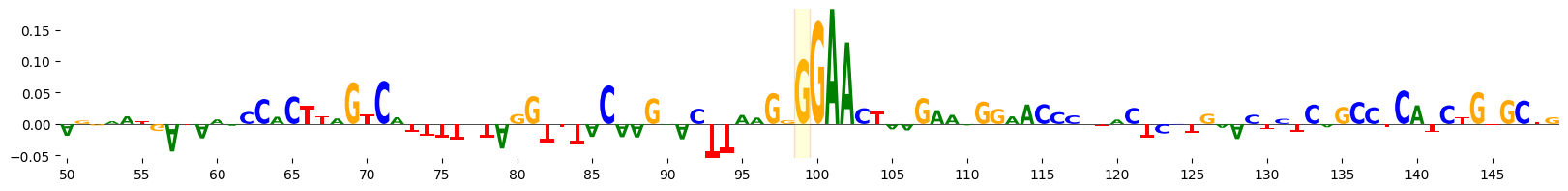

We can visualize the attribution scores for the sequence, highlighting the mutated base. Here, we visualize the attributions for the central 100 bp of the 200 bp sequence.

import grelu.visualize

grelu.visualize.plot_attributions(

ref_attrs, start_pos=50, end_pos=150,

highlight_positions=[99], ticks=5,

edgecolor='red'

)

<logomaker.src.Logo.Logo at 0x7f4173ba3b10>

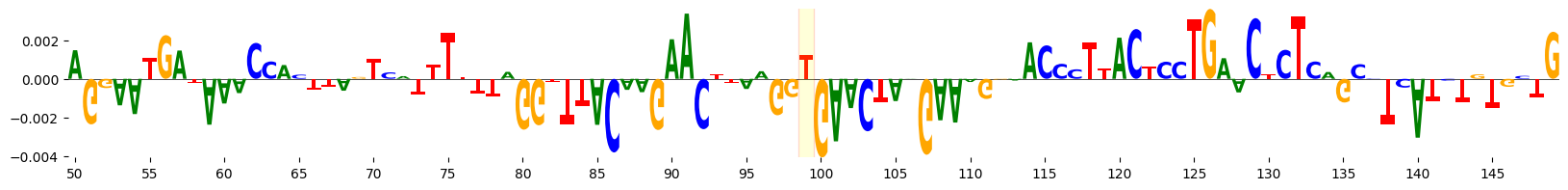

grelu.visualize.plot_attributions(

alt_attrs, start_pos=50, end_pos=150,

highlight_positions=[99], ticks=5,

edgecolor='red',

)

<logomaker.src.Logo.Logo at 0x7f4173868550>

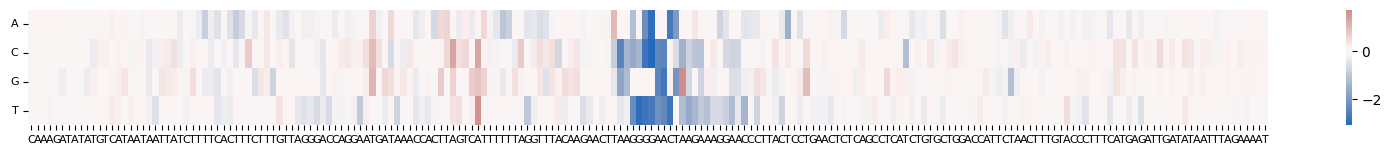

ISM#

We can also perform in silico mutagenesis (ISM) of the bases surrounding the variant to see what the effect would be if we mutated each reference base to any other base. The ISM_predict function in grelu.interpret.score performs every possible single-base substitution on the given sequence, predicts the effect of each substitution, and optionally compares these predictions to the prediction for the reference sequence to return an effect size for each substitution.

Once again, since we are interested in how important each base is to the model’s prediction in microglia, we use the microglia_score transform. This ensures that ISM scores each base’s importance to the microglial prediction only.
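The procedure can be illustrated with a short, self-contained sketch. This is not the ISM_predict implementation: toy_score is a hypothetical scorer standing in for the transformed model prediction, and ism_log2fc is a name invented for this example.

```python
import math

BASES = "ACGT"


def toy_score(seq):
    """Hypothetical scorer: 1 plus the number of 'GGAA' occurrences."""
    return 1.0 + sum(seq[i:i + 4] == "GGAA" for i in range(len(seq) - 3))


def ism_log2fc(seq, score_fn):
    """Score every single-base substitution as log2(mutant / reference).

    Returns a dict mapping each base to a list with one value per position,
    mirroring the 4 x seq_len layout of an ISM table.
    """
    ref_score = score_fn(seq)
    table = {base: [] for base in BASES}
    for pos in range(len(seq)):
        for base in BASES:
            mutated = seq[:pos] + base + seq[pos + 1:]
            table[base].append(math.log2(score_fn(mutated) / ref_score))
    return table


ism_table = ism_log2fc("TTGGAATT", toy_score)

# Substituting the reference base with itself gives 0; breaking the motif
# at position 2 halves the toy score, i.e. a log2 fold change of -1.
print(ism_table["G"][2], ism_table["A"][2])
```

Entries where the substituted base equals the reference base are zero by construction, which matches the zeros along the reference sequence in a table of this kind.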

ism = grelu.interpret.score.ISM_predict(

ref_seq,

model,

prediction_transform=microglia_score, # Focus on the prediction in microglia

compare_func = "log2FC", # Return the log2FC of the mutated sequence prediction w.r.t the reference sequence

devices=0, # Index of the GPU to use

num_workers=8,

)

Predicting DataLoader 0: 100%|███████████████████████████████████████████████| 13/13 [00:00<00:00, 48.07it/s]

ism

| | C | A | A | A | G | A | T | A | T | A | ... | T | T | T | A | G | A | A | A | A | T |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| A | 0.058220 | 0.000000 | 0.000000 | 0.000000 | -0.002522 | 0.000000 | -0.061980 | 0.000000 | 0.039374 | 0.000000 | ... | 0.022865 | -0.201576 | -0.112543 | 0.001650 | -0.101725 | 0.001650 | 0.001650 | 0.001650 | 0.001650 | 0.037523 |

| C | 0.000000 | -0.025550 | -0.000935 | -0.064350 | -0.059129 | -0.027579 | -0.078199 | -0.031715 | -0.049933 | -0.091570 | ... | 0.178785 | -0.174459 | 0.084045 | 0.150017 | -0.071348 | 0.215759 | 0.111641 | 0.128501 | 0.096332 | 0.112648 |

| G | 0.024304 | 0.015685 | -0.011021 | -0.106040 | 0.000000 | -0.299975 | -0.042261 | -0.045126 | -0.057420 | -0.320149 | ... | 0.073225 | 0.003626 | 0.060202 | 0.128809 | 0.001650 | 0.121120 | 0.076424 | 0.069966 | 0.043495 | 0.112302 |

| T | 0.026044 | -0.029737 | 0.037190 | -0.140797 | 0.032200 | -0.049836 | 0.000000 | -0.112758 | 0.000000 | -0.053747 | ... | 0.000000 | 0.000000 | 0.001650 | -0.039917 | -0.161802 | 0.090020 | -0.189904 | -0.020406 | -0.037939 | 0.001650 |

4 rows × 200 columns

grelu.visualize.plot_ISM(

ism, method="heatmap", center=0, figsize=(20, 1.5),

)

<Axes: >

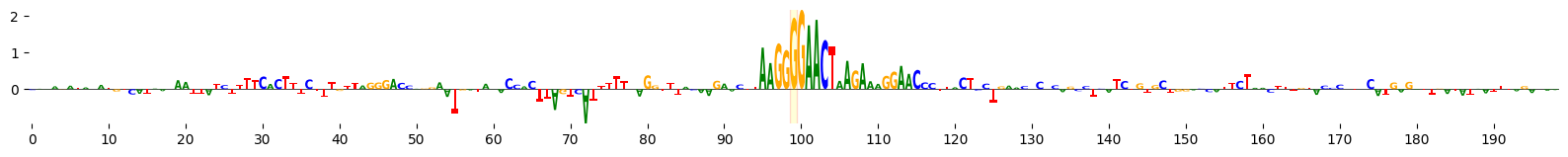

grelu.visualize.plot_ISM(

ism, method='logo', figsize=(20, 1.5), highlight_positions=[99], edgecolor='red'

)

<logomaker.src.Logo.Logo at 0x7f41766838d0>

Scan variant with HOCOMOCO motifs#

We now scan the sequences immediately around the variant to identify known TF motifs that may have been disrupted by the variant.

# Select the central bases of the sequence

central_ref_seq = grelu.sequence.utils.resize(ref_seq, 18)

central_alt_seq = grelu.sequence.utils.resize(alt_seq, 18)

central_ref_seq, central_alt_seq

('ACTTAAGGGGAACTAAGA', 'ACTTAAGGTGAACTAAGA')
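As a quick sanity check, we can confirm that the two 18 bp windows differ only at the variant, and map that position back to the 200 bp sequence. The sketch below assumes the window is a centered crop, so its start offset is (200 - 18) // 2; mismatch_positions is a helper written for this example.

```python
# Central sequences copied from the output above.
central_ref = "ACTTAAGGGGAACTAAGA"
central_alt = "ACTTAAGGTGAACTAAGA"
full_len = 200  # Length of the variant-centered sequences


def mismatch_positions(seq_a, seq_b):
    """Return the 0-based positions at which two equal-length sequences differ."""
    if len(seq_a) != len(seq_b):
        raise ValueError("Sequences must have the same length")
    return [i for i, (a, b) in enumerate(zip(seq_a, seq_b)) if a != b]


# Assumption: the 18 bp window was cropped symmetrically around the center.
window_start = (full_len - len(central_ref)) // 2

local_positions = mismatch_positions(central_ref, central_alt)
full_positions = [window_start + i for i in local_positions]

print(local_positions, full_positions)  # [8] [99]
```

The single mismatch (G in ref, T in alt) falls at position 99 of the full sequence, the same position highlighted in the attribution plots.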

We can use the grelu.interpret.motifs module to scan these sequences with TF motifs. Here, we use the HOCOMOCO v12 motif collection, selected by passing motifs="hocomoco_v12".

# Scan with motifs

import grelu.interpret.motifs

scan = grelu.interpret.motifs.compare_motifs(

ref_seq=central_ref_seq,

alt_seq=central_alt_seq,

motifs="hocomoco_v12",

pthresh=5e-4,

)

scan

| sequence | motif | start | end | strand | alt | ref | foldChange |

|---|---|---|---|---|---|---|---|

| 0 | ISL2.H12CORE.0.SM.B | 0 | 11 | + | 0.000000 | 9.111901 | 0.0 |

| 1 | MZF1.H12CORE.0.P.B | 3 | 15 | + | 0.000000 | 9.261309 | 0.0 |

| 2 | SPI1.H12CORE.0.P.B | 2 | 16 | + | 0.000000 | 6.679440 | 0.0 |

| 3 | SPI1.H12CORE.1.S.B | 4 | 16 | + | 0.000000 | 11.517704 | 0.0 |

| 4 | SPIB.H12CORE.1.S.C | 2 | 15 | + | 0.000000 | 5.641884 | 0.0 |

| 5 | SPIB.H12CORE.2.SM.B | 0 | 16 | + | 0.000000 | 4.544859 | 0.0 |

| 6 | ERR1.H12CORE.0.PSM.A | 1 | 12 | + | 8.008491 | 0.000000 | inf |

| 7 | NR4A2.H12CORE.0.SM.B | 2 | 12 | + | 8.064205 | 0.000000 | inf |

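The ref column contains the score for the motif in the reference allele-containing sequence, and the alt column contains the score in the alternate allele-containing sequence.

Note that the SPI1.H12CORE.0.P.B motif is lost in the alternate allele-containing sequence. SPI1 encodes PU.1, a transcription factor central to myeloid cells such as microglia, so this loss is consistent with the microglia-specific drop in predicted accessibility.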

Compare the variant impact to a background distribution#

We saw that the variant has a strong effect size (log2 fold change). To place this effect size in context, we create a set of background sequences by shuffling the sequence surrounding the variant while conserving dinucleotide frequency. We then insert the reference and alternate alleles in each shuffled sequence, and compute the variant effect size again.

test = grelu.variant.marginalize_variants(

model=model,

variants=variant.obs,

genome="hg19",

prediction_transform=microglia_score,

seed=0,

devices=0,

n_shuffles=100,

compare_func="log2FC",

rc=True, # Average the result over both strands

)

Predicting variant effects

Predicting DataLoader 0: 100%|█████████████████████████████████████████████████| 1/1 [00:00<00:00, 15.52it/s]

Predicting variant effects in background sequences

Predicting DataLoader 0: 100%|█████████████████████████████████████████████████| 4/4 [00:00<00:00, 42.68it/s]

Calculating background distributions

Performing 2-sided test

test

{'effect_size': [-2.742067337036133],

'mean': [0.009891723282635212],

'sd': [0.3251914381980896],

'pvalue': [2.615225515668632e-17]}
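One way to read these numbers is as a z-test against the background. The sketch below assumes (this is not confirmed from the gReLU source) that the 2-sided test compares the observed effect size with a normal distribution defined by the background mean and standard deviation. It uses only the values printed above.

```python
import math

# Values copied from the output above.
effect_size = -2.742067337036133
background_mean = 0.009891723282635212
background_sd = 0.3251914381980896

# Standardize the observed effect against the shuffled-background distribution.
z = (effect_size - background_mean) / background_sd

# Two-sided tail probability under a standard normal: P(|Z| >= |z|).
p_two_sided = math.erfc(abs(z) / math.sqrt(2))

print(z, p_two_sided)
```

Under this assumption, the observed effect lies more than 8 background standard deviations below the mean, and the resulting p-value is of the same order of magnitude as the one reported above.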